- Spark Driver Port

- Spark Driver

- Spark Delivery Driver Reviews

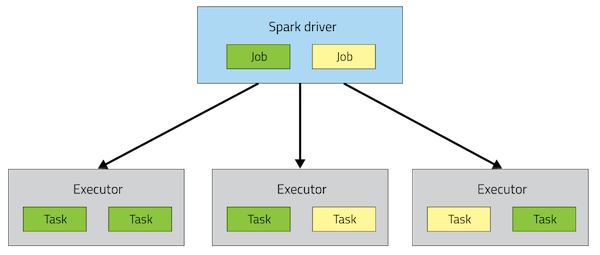

- Spark Driver Executor

- Spark Driver Application

spark.driver.maxResultSize: Limit of total size of serialized results of all partitions for each Spark action (e.g. collect), in bytes. Should be at least 1M, or 0 for unlimited. Jobs will be aborted if the total size is above this limit. Having a high limit may cause out-of-memory errors in the driver (depending on spark.driver.memory and the memory overhead of objects in the JVM).

You can connect business intelligence (BI) tools to Azure Databricks Workspace clusters and SQL Analytics SQL endpoints to query data in tables. This article describes how to get JDBC and ODBC drivers and configuration parameters to connect to clusters and SQL endpoints. For tool-specific connection instructions, see Business intelligence tools.

Permission requirements

The permissions required to access compute resources using JDBC or ODBC depend on whether you are connecting to a cluster or a SQL endpoint.

Workspace cluster requirements

To access a cluster, you must have Can Attach To permission.

If you connect to a terminated cluster and have Can Restart permission, the cluster is started.

SQL Analytics SQL endpoint requirements

To access a SQL endpoint, you must have Can Use permission.

If you connect to a stopped endpoint and have Can Use permission, the SQL endpoint is started.

Step 1: Download and install a JDBC or ODBC driver

For all BI tools, you need a JDBC or ODBC driver to make a connection to Azure Databricks compute resources.

- Go to the Databricks JDBC / ODBC Driver Download page.

- Fill out the form and submit it. The page will update with links to multiple download options.

- Select a driver and download it.

- Install the driver. For JDBC, a JAR is provided which does not require installation. For ODBC, an installation package is provided for your chosen platform.

Step 2: Collect JDBC or ODBC connection information

To configure a JDBC or ODBC driver, you must collect connection information from Azure Databricks. Here are some of the parameters a JDBC or ODBC driver might require:


| Parameter | Value |
|---|---|
| Authentication | See Get authentication credentials. |
| Host, port, HTTP path, JDBC URL | See Get server hostname, port, HTTP path, and JDBC URL. |

The following are usually specified in the httpPath for JDBC and the DSN conf for ODBC:

| Parameter | Value |
|---|---|
| Spark server type | Spark Thrift Server |
| Schema/Database | default |
| Authentication mechanism (AuthMech) | See Get authentication credentials. |
| Thrift transport | http |
| SSL | 1 |

Get authentication credentials

This section describes how to collect the credentials supported for authenticating BI tools to Azure Databricks compute resources.

Username and password authentication

You can use an Azure Databricks personal access token or an Azure Active Directory (Azure AD) token. Databricks JDBC and ODBC drivers do not support Azure Active Directory username and password authentication.

- Username: token

- Password: A user-generated token. The procedure for retrieving a token for username and password authentication depends on whether you are using an Azure Databricks Workspace cluster or a SQL Analytics SQL endpoint.

  - Workspace:

    - Azure Databricks personal access token: Get a personal access token using the instructions in Generate a personal access token.

    - Azure Active Directory token: Databricks JDBC and ODBC drivers 2.6.15 and above support authentication using an Azure Active Directory token. Get a token using the instructions in Get an access token.

  - SQL Analytics:

    - SQL Analytics personal access token: Get a personal access token using the instructions in Generate a personal access token.

Get server hostname, port, HTTP path, and JDBC URL

The procedure for retrieving JDBC and ODBC parameters depends on whether you are using an Azure Databricks Workspace cluster or a SQL Analytics SQL endpoint.


Workspace cluster

- Click the Clusters icon in the sidebar.

- Click a cluster.

- Click the Advanced Options toggle.

- Click the JDBC/ODBC tab.

- Copy the parameters required by your BI tool.

SQL Analytics SQL endpoint

- Click the Endpoints icon in the sidebar.

- Click an endpoint.

- Click the Connection Details tab.

- Copy the parameters required by your BI tool.
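Both procedures end with a JDBC URL you can copy. If a tool asks for the host, port, and HTTP path separately, a small helper can split the copied URL. The sketch below is an illustration, not part of any Databricks library: the class name `JdbcUrlParts` is hypothetical, and it assumes the URL follows the `jdbc:spark://<host>:<port>/<schema>;key=value;...` layout.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical helper: splits a copied JDBC URL into host, port, schema,
 * and its ";"-separated key=value settings.
 * Assumes the layout jdbc:spark://<host>:<port>/<schema>;key=value;...
 */
final class JdbcUrlParts {
    final String host;
    final int port;                    // -1 when the URL has no explicit port
    final String schema;               // "" when the URL has no schema segment
    final Map<String, String> settings;

    private JdbcUrlParts(String host, int port, String schema, Map<String, String> settings) {
        this.host = host;
        this.port = port;
        this.schema = schema;
        this.settings = Collections.unmodifiableMap(settings);
    }

    static JdbcUrlParts parse(String url) {
        final String prefix = "jdbc:spark://";
        if (!url.startsWith(prefix)) {
            // Do not echo the URL: it may already contain a token in PWD.
            throw new IllegalArgumentException("expected a jdbc:spark:// URL");
        }
        String[] segments = url.substring(prefix.length()).split(";");

        // First segment: <host>:<port>/<schema>
        String authority = segments[0];
        int slash = authority.indexOf('/');
        String hostPort = slash >= 0 ? authority.substring(0, slash) : authority;
        String schema = slash >= 0 ? authority.substring(slash + 1) : "";
        int colon = hostPort.lastIndexOf(':');
        String host = colon >= 0 ? hostPort.substring(0, colon) : hostPort;
        int port = colon >= 0 ? Integer.parseInt(hostPort.substring(colon + 1)) : -1;

        // Remaining segments: key=value settings, kept in their original order
        Map<String, String> settings = new LinkedHashMap<>();
        for (int i = 1; i < segments.length; i++) {
            String segment = segments[i];
            if (segment.isEmpty()) {
                continue; // tolerate empty segments such as ";;"
            }
            int eq = segment.indexOf('=');
            if (eq < 0) {
                settings.put(segment, "");
            } else {
                settings.put(segment.substring(0, eq), segment.substring(eq + 1));
            }
        }
        return new JdbcUrlParts(host, port, schema, settings);
    }
}
```

The error message deliberately omits the URL, because a copied URL can already contain a secret in `PWD`.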

Step 3: Configure JDBC URL

The steps for configuring the JDBC URL depend on whether you are using an Azure Databricks Workspace cluster or a SQL Analytics SQL endpoint.


Workspace cluster

Username and password authentication

In the JDBC URL you copied in Get server hostname, port, HTTP path, and JDBC URL, replace <personal-access-token> with the token you created in Get authentication credentials.
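A minimal sketch of that substitution in Java, assuming the Simba Spark JDBC URL layout (`jdbc:spark://...;transportMode=http;ssl=1;httpPath=...;AuthMech=3;UID=token;PWD=...`) that matches the parameters listed in Step 2. The class and method names are hypothetical. The host, port, and HTTP path are placeholders for the values you copied.

```java
/**
 * Hypothetical helper: assembles a JDBC URL for personal access token
 * authentication. Assumes the Simba Spark JDBC driver URL layout.
 */
final class PatJdbcUrl {
    private PatJdbcUrl() {}

    static String build(String host, int port, String httpPath, String personalAccessToken) {
        return "jdbc:spark://" + host + ":" + port + "/default" // Schema/Database: default
                + ";transportMode=http"                         // Thrift transport: http
                + ";ssl=1"                                      // SSL: 1
                + ";httpPath=" + httpPath                        // from the JDBC/ODBC tab
                + ";AuthMech=3"                                 // user name + password authentication
                + ";UID=token"                                  // user name is the literal word "token"
                + ";PWD=" + personalAccessToken;                // password is the token itself
    }
}
```

Pass the result to `java.sql.DriverManager.getConnection(url)` with the driver JAR from Step 1 on the classpath. For a SQL Analytics SQL endpoint the layout should be the same; only the HTTP path differs.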

Azure Active Directory token authentication

Remove the existing authentication parameters (AuthMech, UID, PWD) from the JDBC URL and add the following parameters, using the Azure AD token obtained in Get authentication credentials: AuthMech=11;Auth_Flow=0;Auth_AccessToken=<Azure AD token>

Also see Refresh an Azure Active Directory token.
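The parameter swap for Azure AD token authentication can be sketched as a string rewrite. This assumes the Simba driver keys `AuthMech=11`, `Auth_Flow=0`, and `Auth_AccessToken`; the class name is hypothetical, and the token is whatever you obtained in Get authentication credentials.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical helper: rewrites a personal-access-token JDBC URL so that it
 * uses an Azure AD token instead (drops AuthMech, UID, PWD; appends AAD keys).
 */
final class AadJdbcUrl {
    private AadJdbcUrl() {}

    static String withAzureAdToken(String jdbcUrl, String azureAdToken) {
        List<String> kept = new ArrayList<>();
        for (String segment : jdbcUrl.split(";")) {
            if (segment.isEmpty()) {
                continue;
            }
            int eq = segment.indexOf('=');
            String key = eq >= 0 ? segment.substring(0, eq) : segment;
            // Drop the user name/password authentication settings.
            if (key.equalsIgnoreCase("AuthMech") || key.equalsIgnoreCase("UID")
                    || key.equalsIgnoreCase("PWD")) {
                continue;
            }
            kept.add(segment);
        }
        kept.add("AuthMech=11");
        kept.add("Auth_Flow=0");
        kept.add("Auth_AccessToken=" + azureAdToken);
        return String.join(";", kept);
    }
}
```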

SQL Analytics SQL endpoint

In the JDBC URL you copied in Get server hostname, port, HTTP path, and JDBC URL, replace <personal-access-token> with the token you created in Get authentication credentials.

Configure for native query syntax

JDBC and ODBC drivers accept SQL queries in ANSI SQL-92 dialect and translate the queries to Spark SQL. If your application generates Spark SQL directly or your application uses any non-ANSI SQL-92 standard SQL syntax specific to Azure Databricks, Databricks recommends that you add ;UseNativeQuery=1 to the connection configuration. With that setting, drivers pass the SQL queries verbatim to Azure Databricks.
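A sketch of adding the setting to a JDBC URL. The helper name is hypothetical. It leaves the URL untouched if `UseNativeQuery` is already present, so an explicit `UseNativeQuery=0` chosen by the caller is respected. For ODBC, the equivalent is assumed to be a `UseNativeQuery=1` entry in the DSN; check the driver guide.

```java
import java.util.Locale;

/** Hypothetical helper: appends UseNativeQuery=1 to a JDBC URL if it is not set yet. */
final class NativeQuery {
    private NativeQuery() {}

    static String enable(String jdbcUrl) {
        for (String segment : jdbcUrl.split(";")) {
            if (segment.toLowerCase(Locale.ROOT).startsWith("usenativequery=")) {
                return jdbcUrl; // already configured; keep the caller's choice
            }
        }
        String separator = jdbcUrl.endsWith(";") ? "" : ";";
        return jdbcUrl + separator + "UseNativeQuery=1";
    }
}
```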

Configure ODBC Data Source Name for the Simba ODBC driver

The Data Source Name (DSN) configuration contains the parameters for communicating with a specific database. BI tools like Tableau usually provide a user interface for entering these parameters. If you have to install and manage the Simba ODBC driver yourself, you might need to create the configuration files and also allow your Driver Manager (ODBC Data Source Administrator on Windows and unixODBC/iODBC on Unix) to access them. Create two files: /etc/odbc.ini and /etc/odbcinst.ini.


/etc/odbc.ini

Username and password authentication

Set the content of /etc/odbc.ini to a DSN definition for your cluster or SQL endpoint, then:

- Set <personal-access-token> to the token you retrieved in Get authentication credentials.

- Set the server, port, and HTTP parameters to the ones you retrieved in Get server hostname, port, HTTP path, and JDBC URL.
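The DSN body itself is not reproduced here, so the following sketch shows one way to generate it from the Step 2 parameters. The key names and numeric codes (`SparkServerType=3` for Spark Thrift Server, `ThriftTransport=2` for HTTP, `AuthMech=3` for user name and password) are assumptions based on the Simba Spark ODBC driver; confirm them in the driver's install guide. `Driver=Simba` assumes a driver registered under that name in /etc/odbcinst.ini. The class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: renders an odbc.ini DSN section for token authentication. */
final class OdbcIniPat {
    private OdbcIniPat() {}

    static Map<String, String> entries(String host, int port, String httpPath, String token) {
        Map<String, String> e = new LinkedHashMap<>();
        e.put("Driver", "Simba");          // assumed to match the name in /etc/odbcinst.ini
        e.put("Host", host);
        e.put("Port", Integer.toString(port));
        e.put("HTTPPath", httpPath);
        e.put("SparkServerType", "3");     // assumed code for Spark Thrift Server
        e.put("Schema", "default");
        e.put("ThriftTransport", "2");     // assumed code for http
        e.put("SSL", "1");
        e.put("AuthMech", "3");            // user name + password
        e.put("UID", "token");
        e.put("PWD", token);
        return e;
    }

    static String render(String dsnName, Map<String, String> entries) {
        StringBuilder sb = new StringBuilder();
        sb.append('[').append(dsnName).append("]\n");
        for (Map.Entry<String, String> entry : entries.entrySet()) {
            sb.append(entry.getKey()).append('=').append(entry.getValue()).append('\n');
        }
        return sb.toString();
    }
}
```

Write the rendered text to /etc/odbc.ini with whatever tooling you normally use for files under /etc (root access is usually required).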

Azure Active Directory token authentication

Set the content of /etc/odbc.ini to a DSN definition for your cluster or SQL endpoint, then:

- Set <Azure AD token> to the Azure Active Directory token you retrieved in Get authentication credentials.

- Set the server, port, and HTTP parameters to the ones you retrieved in Get server hostname, port, HTTP path, and JDBC URL.

Also see Refresh an Azure Active Directory token.
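Switching an existing DSN to Azure AD token authentication mostly means replacing its authentication entries. A sketch over the DSN's key/value pairs, assuming the Simba keys `AuthMech=11`, `Auth_Flow=0`, and `Auth_AccessToken` (the class name is hypothetical):

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: swaps token-password auth entries for Azure AD token entries. */
final class OdbcIniAad {
    private OdbcIniAad() {}

    static Map<String, String> withAzureAdToken(Map<String, String> dsnEntries, String azureAdToken) {
        Map<String, String> out = new LinkedHashMap<>(dsnEntries); // the input map is not modified
        out.remove("UID");                 // user name/password settings no longer apply
        out.remove("PWD");
        out.put("AuthMech", "11");         // an existing AuthMech keeps its position; value replaced
        out.put("Auth_Flow", "0");
        out.put("Auth_AccessToken", azureAdToken);
        return out;
    }
}
```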

/etc/odbcinst.ini

Set the content of /etc/odbcinst.ini to register the driver, and set <driver-path> according to the operating system you chose when you downloaded the driver in Step 1:

- macOS: /Library/simba/spark/lib/libsparkodbc_sbu.dylib

- Linux (64-bit): /opt/simba/spark/lib/64/libsparkodbc_sb64.so

- Linux (32-bit): /opt/simba/spark/lib/32/libsparkodbc_sb32.so
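A sketch that picks the driver path listed above from the JVM's `os.name` and `os.arch` values and renders a minimal /etc/odbcinst.ini. The file layout (an `[ODBC Drivers]` section plus one section per driver, in unixODBC style) and the driver name are assumptions; the name must match the `Driver=` entry in /etc/odbc.ini. The class name is hypothetical.

```java
import java.util.Locale;

/** Hypothetical helper: chooses the Simba driver library and renders odbcinst.ini. */
final class OdbcInst {
    private OdbcInst() {}

    static String driverPath(String osName, String osArch) {
        String os = osName.toLowerCase(Locale.ROOT);
        if (os.contains("mac")) {
            return "/Library/simba/spark/lib/libsparkodbc_sbu.dylib";
        }
        if (os.contains("linux")) {
            // "amd64", "x86_64", and "aarch64" all contain "64"; "x86" and "i386" do not.
            return osArch.contains("64")
                    ? "/opt/simba/spark/lib/64/libsparkodbc_sb64.so"
                    : "/opt/simba/spark/lib/32/libsparkodbc_sb32.so";
        }
        // Windows uses the ODBC Data Source Administrator instead of these files.
        throw new IllegalArgumentException("no odbcinst.ini path for " + osName);
    }

    static String odbcinstIni(String driverName, String driverPath) {
        return "[ODBC Drivers]\n"
                + driverName + " = Installed\n"
                + "\n"
                + "[" + driverName + "]\n"
                + "Driver = " + driverPath + "\n";
    }
}
```

`OdbcInst.driverPath(System.getProperty("os.name"), System.getProperty("os.arch"))` returns the path for the machine the code runs on.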

Configure paths of ODBC configuration files

Specify the paths of the two files in environment variables so that they can be used by the Driver Manager:

where <simba-ini-path> is:

- macOS: /Library/simba/spark/lib

- Linux (64-bit): /opt/simba/sparkodbc/lib/64

- Linux (32-bit): /opt/simba/sparkodbc/lib/32
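The environment variable definitions are not reproduced here. A sketch, assuming the unixODBC conventions `ODBCINI` (path to odbc.ini) and `ODBCSYSINI` (directory containing odbcinst.ini), plus a Simba-specific `SIMBASPARKINI` variable pointing at a `simba.sparkodbc.ini` file under `<simba-ini-path>`. `SIMBASPARKINI` and the file name are assumptions to verify in the driver guide, and iODBC on macOS is understood to use `ODBCINSTINI` (a file path) rather than `ODBCSYSINI`. The class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: environment variables that point the Driver Manager at the config files. */
final class OdbcEnv {
    private OdbcEnv() {}

    static Map<String, String> variables(String simbaIniPath) {
        String dir = simbaIniPath.endsWith("/")
                ? simbaIniPath.substring(0, simbaIniPath.length() - 1)
                : simbaIniPath;
        Map<String, String> env = new LinkedHashMap<>();
        env.put("ODBCINI", "/etc/odbc.ini");                       // DSN definitions
        env.put("ODBCSYSINI", "/etc");                             // unixODBC: directory holding odbcinst.ini
        env.put("SIMBASPARKINI", dir + "/simba.sparkodbc.ini");    // assumed Simba driver settings file
        return env;
    }
}
```

To apply the variables to a child process, for example unixODBC's `isql` test tool, copy the map into `new ProcessBuilder("isql", "-v", "<dsn-name>").environment()` before starting it.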

Refresh an Azure Active Directory token

An Azure Active Directory token expires after 1 hour. If the access token is not refreshed using the refresh_token, you must manually replace the token with a new one. This section describes how to programmatically refresh the token of an existing session without breaking the connection.

- Follow the steps in Refresh an access token.

- Update the token as follows:

  - JDBC: Call java.sql.Connection.setClientInfo with the new value for Auth_AccessToken.

  - ODBC: Call SQLSetConnectAttr for SQL_ATTR_CREDENTIALS with the new value for Auth_AccessToken.
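For JDBC, the token update is a single `setClientInfo` call. The sketch below wraps it and optionally schedules it with a `ScheduledExecutorService` so that the token is replaced before the 1-hour expiry. `tokenSource` is a hypothetical hook standing in for your own implementation of Refresh an access token; the class name is also hypothetical.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Hypothetical helper: pushes refreshed Azure AD tokens into an open JDBC connection. */
final class TokenRefresher {
    private TokenRefresher() {}

    /** Replaces the token of an existing session without reconnecting. */
    static void refresh(Connection connection, String newAccessToken) throws SQLException {
        connection.setClientInfo("Auth_AccessToken", newAccessToken);
    }

    /** Re-applies a token from tokenSource every periodMinutes. */
    static ScheduledFuture<?> schedule(ScheduledExecutorService scheduler, Connection connection,
                                       Supplier<String> tokenSource, long periodMinutes) {
        return scheduler.scheduleAtFixedRate(() -> {
            try {
                refresh(connection, tokenSource.get());
            } catch (SQLException e) {
                // Report and keep the schedule alive; an exception escaping the task would cancel it.
                System.err.println("Token refresh failed: " + e.getMessage());
            }
        }, periodMinutes, periodMinutes, TimeUnit.MINUTES);
    }
}
```

A period somewhat shorter than the token lifetime (for example 50 minutes) leaves a safety margin; that value is an illustrative choice, not a documented requirement.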

Troubleshooting

See Troubleshooting JDBC and ODBC connections.

Note

As of Sep 2020, this connector is not actively maintained. However, Apache Spark Connector for SQL Server and Azure SQL is now available, with support for Python and R bindings, an easier-to-use interface to bulk insert data, and many other improvements. We strongly encourage you to evaluate and use the new connector instead of this one. The information about the old connector (this page) is only retained for archival purposes.

The Spark connector enables databases in Azure SQL Database, Azure SQL Managed Instance, and SQL Server to act as the input data source or output data sink for Spark jobs. It allows you to use real-time transactional data in big data analytics and persist results for ad hoc queries or reporting. Compared to the built-in JDBC connector, this connector provides the ability to bulk insert data into your database, which can be 10x to 20x faster than row-by-row insertion. The Spark connector supports Azure Active Directory (Azure AD) authentication to connect to Azure SQL Database and Azure SQL Managed Instance, allowing you to connect to your database from Azure Databricks using your Azure AD account. It provides interfaces similar to those of the built-in JDBC connector, so it is easy to migrate your existing Spark jobs to this connector.

Download and build a Spark connector

The GitHub repo for the old connector previously linked to from this page is not actively maintained. Instead, we strongly encourage you to evaluate and use the new connector.


Official supported versions

| Component | Version |
|---|---|
| Apache Spark | 2.0.2 or later |
| Scala | 2.10 or later |
| Microsoft JDBC Driver for SQL Server | 6.2 or later |
| Microsoft SQL Server | SQL Server 2008 or later |
| Azure SQL Database | Supported |
| Azure SQL Managed Instance | Supported |

The Spark connector uses the Microsoft JDBC Driver for SQL Server to move data between Spark worker nodes and databases.

The dataflow is as follows:

- The Spark master node connects to databases in SQL Database or SQL Server and loads data from a specific table or by using a specific SQL query.

- The Spark master node distributes data to worker nodes for transformation.

- The worker nodes connect to databases in SQL Database or SQL Server and write data to them. You can choose row-by-row insertion or bulk insert.

The following diagram illustrates the data flow.

Build the Spark connector

Currently, the connector project uses Maven. To build the connector without dependencies, you can run:


mvn clean package

Alternatively, download the latest version of the JAR from the release folder.

Then include the SQL Database Spark JAR.

Connect and read data using the Spark connector

You can connect to databases in SQL Database and SQL Server from a Spark job to read or write data. You can also run a DML or DDL query in databases in SQL Database and SQL Server.
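The connector moves data through the Microsoft JDBC Driver for SQL Server, so it can help to confirm the server and database names with a plain JDBC URL first. A sketch; the helper name and the 30-second login timeout are illustrative choices, while `databaseName`, `encrypt`, `hostNameInCertificate`, and `loginTimeout` are standard connection properties of that driver. This does not use the Spark connector itself.

```java
/** Hypothetical helper: JDBC URL for an Azure SQL Database via the Microsoft JDBC Driver for SQL Server. */
final class SqlServerJdbcUrl {
    private SqlServerJdbcUrl() {}

    static String azureSql(String serverName, String databaseName) {
        return "jdbc:sqlserver://" + serverName + ".database.windows.net:1433"
                + ";databaseName=" + databaseName
                + ";encrypt=true"                                  // encrypt traffic to Azure SQL
                + ";hostNameInCertificate=*.database.windows.net"  // expected certificate host name
                + ";loginTimeout=30";                              // seconds (illustrative value)
    }
}
```

With Spark's built-in JDBC data source, such a URL can be passed to `spark.read().jdbc(url, "dbo.Clients", props)`, where `dbo.Clients` is a placeholder table and `props` holds `user` and `password`.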

Read data from Azure SQL and SQL Server

Read data from Azure SQL and SQL Server with specified SQL query

Write data to Azure SQL and SQL Server

Run DML or DDL query in Azure SQL and SQL Server

Connect from Spark using Azure AD authentication

You can connect to Azure SQL Database and SQL Managed Instance using Azure AD authentication. Use Azure AD authentication to centrally manage identities of database users and as an alternative to SQL Server authentication.

Connecting using ActiveDirectoryPassword Authentication Mode

Setup requirement

If you are using the ActiveDirectoryPassword authentication mode, you need to download azure-activedirectory-library-for-java and its dependencies, and include them in the Java build path.
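With the Microsoft JDBC Driver for SQL Server, this mode is selected through connection properties. A sketch, assuming you connect with `DriverManager.getConnection(url, props)` and have the ADAL library on the classpath as described above; the class name is hypothetical. The connector's own configuration is expected to accept the same `authentication` and `encrypt` option names, which is an assumption to verify against the repository README.

```java
import java.util.Properties;

/** Hypothetical helper: connection properties for Azure AD user name + password authentication. */
final class AadPasswordProperties {
    private AadPasswordProperties() {}

    static Properties forUser(String userPrincipalName, String password) {
        Properties props = new Properties();
        props.setProperty("authentication", "ActiveDirectoryPassword"); // Azure AD user name + password
        props.setProperty("user", userPrincipalName);                    // e.g. someone@contoso.com
        props.setProperty("password", password);
        props.setProperty("encrypt", "true");                            // encrypt traffic to Azure SQL
        props.setProperty("hostNameInCertificate", "*.database.windows.net");
        return props;
    }
}
```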


Connecting using an access token

Setup requirement

If you are using the access token-based authentication mode, you need to download azure-activedirectory-library-for-java and its dependencies, and include them in the Java build path.

See Use Azure Active Directory Authentication to learn how to get an access token for your database in Azure SQL Database or Azure SQL Managed Instance.
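Once you have a token, the Microsoft JDBC Driver for SQL Server accepts it through the `accessToken` connection property, passed in a `Properties` object rather than in the URL, and without `user` or `password`. A sketch; the class name is hypothetical and acquiring the token is out of scope here.

```java
import java.util.Properties;

/** Hypothetical helper: connection properties for access-token authentication. */
final class AccessTokenProperties {
    private AccessTokenProperties() {}

    static Properties forToken(String accessToken) {
        if (accessToken == null || accessToken.isEmpty()) {
            throw new IllegalArgumentException("access token must not be empty");
        }
        Properties props = new Properties();
        props.setProperty("accessToken", accessToken); // no user/password when a token is supplied
        props.setProperty("encrypt", "true");
        props.setProperty("hostNameInCertificate", "*.database.windows.net");
        return props;
    }
}
```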

Write data using bulk insert

The built-in JDBC connector writes data into your database using row-by-row insertion. You can use the Spark connector to write data to Azure SQL and SQL Server using bulk insert, which significantly improves write performance when loading large data sets or loading data into tables that use a columnstore index.
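The bulk-copy behavior is tuned with a few extra options. The names below (`bulkCopyBatchSize`, `bulkCopyTableLock`, `bulkCopyTimeout`) are the ones the azure-sqldb-spark README used with its `bulkCopyToSqlDB` method; treat them as assumptions to check against the version you build. In Scala they would go into the connector's `Config(Map(...))`; this Java sketch only assembles the option map, and its class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: adds bulk-copy options to a map of connector options. */
final class BulkCopyOptions {
    private BulkCopyOptions() {}

    static Map<String, String> withBulkCopy(Map<String, String> connectionOptions,
                                            int batchSize, boolean tableLock, int timeoutSeconds) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batch size must be positive");
        }
        if (timeoutSeconds < 0) {
            throw new IllegalArgumentException("timeout must not be negative");
        }
        Map<String, String> options = new LinkedHashMap<>(connectionOptions); // input left unchanged
        options.put("bulkCopyBatchSize", Integer.toString(batchSize));        // rows per batch
        options.put("bulkCopyTableLock", Boolean.toString(tableLock));        // lock the table during the copy
        options.put("bulkCopyTimeout", Integer.toString(timeoutSeconds));     // seconds
        return options;
    }
}
```

A timeout of 0 is accepted because the underlying SQL Server bulk-copy API is understood to treat 0 as no limit.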


Next steps

If you haven't already, download the Spark connector from the azure-sqldb-spark GitHub repository and explore the additional resources in the repo.


You might also want to review the Apache Spark SQL, DataFrames, and Datasets Guide and the Azure Databricks documentation.